Differential Attention: Diff Transformer amplifies attention to the relevant context while canceling noise. Instead of relying on a single attention map, it introduces differential attention, which calculates attention scores as the difference between two separate softmax attention maps. The mechanism is proposed to cancel attention noise through differential denoising. An open-source community implementation of the model from the Differential Transformer paper is also available.
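The idea of scoring attention as a difference of two maps can be sketched in a few lines. This is a minimal illustration in plain Python, not the reference or community implementation: the function names (`softmax`, `attention_map`, `diff_attention`) and the scalar weight `lam` on the second map are assumptions made for this example, and the inputs are small hand-written matrices rather than learned projections.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_map(q, k):
    """Scaled dot-product attention map: softmax(q k^T / sqrt(d)), row by row."""
    d = len(q[0])
    return [
        softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k])
        for qi in q
    ]


def diff_attention(q1, k1, q2, k2, v, lam):
    """Hypothetical sketch: (map1 - lam * map2) applied to the values v.

    `lam` is an assumed scalar weight on the second map; lam = 1.0 gives a
    plain difference and lam = 0.0 falls back to ordinary attention.
    """
    a1 = attention_map(q1, k1)
    a2 = attention_map(q2, k2)
    # Subtract the second map from the first, entry by entry.
    diff = [[x - lam * y for x, y in zip(r1, r2)] for r1, r2 in zip(a1, a2)]
    # Weighted sum of value rows using the differential scores.
    n_cols = len(v[0])
    return [
        [sum(w * vj[c] for w, vj in zip(row, v)) for c in range(n_cols)]
        for row in diff
    ]
```

In this sketch, any score pattern shared by both maps is subtracted out, which is one way to picture the noise cancellation described above: if the two maps are identical and `lam` is 1.0, the output is exactly zero.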

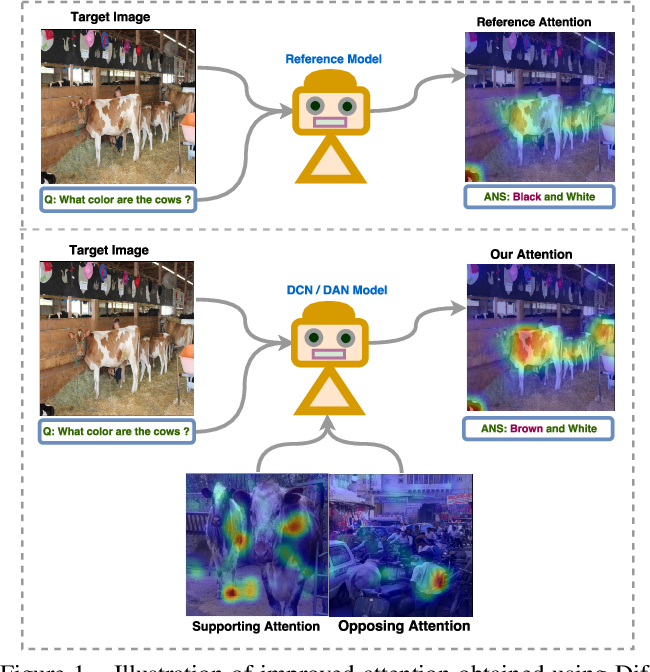

Figure 1 from Differential Attention for Visual Question Answering

(PDF) Global Flood Detection from SAR Imagery Using Differential

DIFFERENTIAL DIAGNOSIS OF ADULT ATTENTION

[PDF] Differential Attention for Visual Question Answering

Figure 1 from Differential Attention Orientated Cascade Network for

(PDF) Differential Attention to Food Images in Sated and Deprived Subjects

![[PDF] Differential Attention for Visual Question Answering](https://i1.rgstatic.net/publication/324167263_Differential_Attention_for_Visual_Question_Answering/links/5ac2f843aca27222c75cf56a/largepreview.png)